Quick Takes: Sensor Suites and Higher-Level Autonomy

LeddarTech, Renesas and Coast Technologies execs share their views

(Images: LeddarTech)

Lidar developer LeddarTech is hosting a series of webinars on autonomous driving and new mobility services. The latest session focused on "Multiple Sensing Modalities: The Key to Level 3-5 Autonomy."

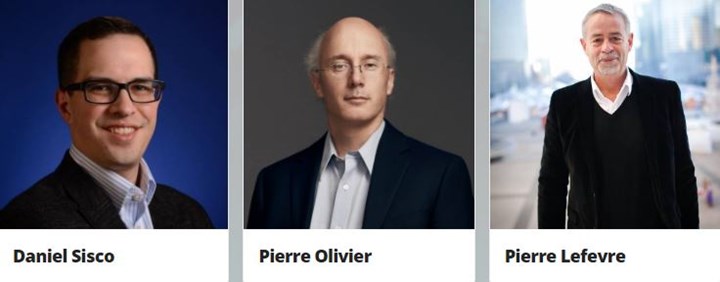

Who?

The panel featured a pair of similarly named CTOs: Pierre Oliver of LeddarTech and Pierre Lefrevre of Coast Autonomous, a mobility-as-a-service and self-driving vehicle developer. They were joined by Daniel Sisco, senior automotive director for chip giant Renesas.

Here are a few highlights and insights:

Putting the Plus in L2

Oliver: “Level 2 is the first stage where the car starts to drive itself. But it’s not very good. … Level 2+ is Level 2 that works. It’s what most people would like today.”

Coast’s Mission

Lefrevre: “We don’t build autonomous vehicles. We build road missions with a focus on safety.

“This requires both long- and very close-range perception… [and] a lot of redundancy in field of view, range and technology.”

On Lidar

Oliver: “We’re still trying to catch up and deliver lidar that is at a similar maturity level as cameras and radar. Getting sufficient processing at an affordable cost and power budget remains a challenge.”

Catching Up to Humans

Sisco: “The human brain does incredible parallel processing that we are struggling to emulate from the compute perspective. You can have the largest sensor suite in the world. But at the end of the day you have to more or less serially compress all of that down to a scene and make decisions based on the environment.

“Is there a sensor that replicates the instinct of a human, who may see some flash of light or hear the tires on the road to sense something has gone wrong and feel that the vehicle is drifting a little, so I know, because of my brain and experience, that I probably have a flat tire? We have to artificially replicate these in autonomous systems.”

Improving ADAS

Oliver: “ADAS is very much about safety and convenience. But all evidence shows that ADAS today is not very good in instrumented tests or consumer feedback. Many people turn off these features because they aren’t delivering what they claim. There is room for much improved ADAS.”

Compute Power and the Cloud

Sisco: “Compute power per watt is key. Cooling systems, power dissipation, even generating the power to run these ECUs has a big effect on the vehicle system overall.

“We have to think how we interact with that cloud, during run time, development, testing. How do we get data back and forth between the cloud and the edge and what role does it play?”

Innovation Needed

Oliver: “We can all use better sensors and faster processing.

“The key is in the processing and perception. It’s about how can the automated vehicle better emulate the behavior of the human?

“How do you better process all the sensor input and leverage the cues and infrastructure to deliver solutions that match or come close to human drivers?”